Hello everyone!

Installation

I am using a Raspberry Pi 4B Rev 1.5 (2 GB) running the stable version of FreedomBox, installed on a 64 GB SanDisk Ultra microSD card with Raspberry Pi Imager. It works fine behind a router, with DMZ configured.

I wanted to use it as a storage device, so I bought four Fanxiang S101Q 1 TB SSDs (all the same model, bought as four on Amazon). They are internal SSDs: I designed a box to hold them, and internal SSDs are generally smaller and all have exactly the same dimensions, which makes them easier to replace. I connect them to the Pi with SATA-to-USB converters.

I just want to note that each SSD needs 1 A (4 A in total for the four drives), and I'm using a 27 W power supply (5.1 V, 5 A).

Problem Description

The problem is that they all report exactly the same ID: Fanxiang S101Q 1TB (000000000069).

When only one is connected to the Pi, I can manage it, for example modify its partitions.

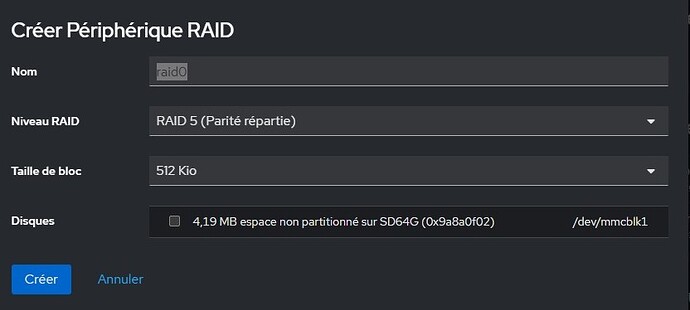

But when two or more are plugged in, they are “registered” as a single disk, which makes managing or modifying them impossible. I wanted to use them in RAID 5, so I deleted all partitions from the disks one by one and then connected all of them, but the OS needs to create partitions on the disks and is unable to, and I don't know why (maybe it can't tell the disks apart).

I tried to modify some disk data, but the disks already have different serial numbers, so I don't know what to do.
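For reference, here is a sketch of how one could check which identifiers the system actually reports for each disk. It lists every whole disk with its model and serial and flags any model/serial pair shared by more than one disk. This is only a sketch under assumptions: it relies on `lsblk` from util-linux supporting JSON output (`--json`), and the helper names `find_duplicate_ids` and `list_disks` are made up for illustration.

```python
import json
import subprocess
from collections import defaultdict


def find_duplicate_ids(devices):
    """Hypothetical helper: group disks by (model, serial) and keep only
    identities that are reported by two or more disks."""
    groups = defaultdict(list)
    for dev in devices:
        # Skip loop devices, ROM drives, etc.; only whole disks matter here.
        if dev.get("type") != "disk":
            continue
        # lsblk reports missing values as null, which json.loads turns into None.
        model = (dev.get("model") or "").strip()
        serial = (dev.get("serial") or "").strip()
        groups[(model, serial)].append(dev["name"])
    return {key: names for key, names in groups.items() if len(names) > 1}


def list_disks():
    """Hypothetical helper: ask lsblk for top-level devices only (--nodeps), as JSON."""
    out = subprocess.run(
        ["lsblk", "--json", "--nodeps", "-o", "NAME,MODEL,SERIAL,TYPE"],
        capture_output=True, text=True, check=True,
    ).stdout
    return json.loads(out)["blockdevices"]


if __name__ == "__main__":
    try:
        dupes = find_duplicate_ids(list_disks())
    except (OSError, subprocess.CalledProcessError) as err:
        # lsblk is missing, or too old to support --json.
        print(f"Could not run lsblk: {err}")
    else:
        if not dupes:
            print("No two disks report the same model and serial.")
        for (model, serial), names in dupes.items():
            print(f"{model} ({serial}) is reported by: {', '.join(names)}")
```

On the Pi this would be run with `python3` (for example saved as a hypothetical `check_ids.py`) while all four drives are plugged in; if several device names appear on one line, the system is seeing the same identity for those drives.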

If you have any ideas…

Thanks in advance!